Shipping AI at Ludicrous Speed: My Deployment Playbook

October 21, 2025

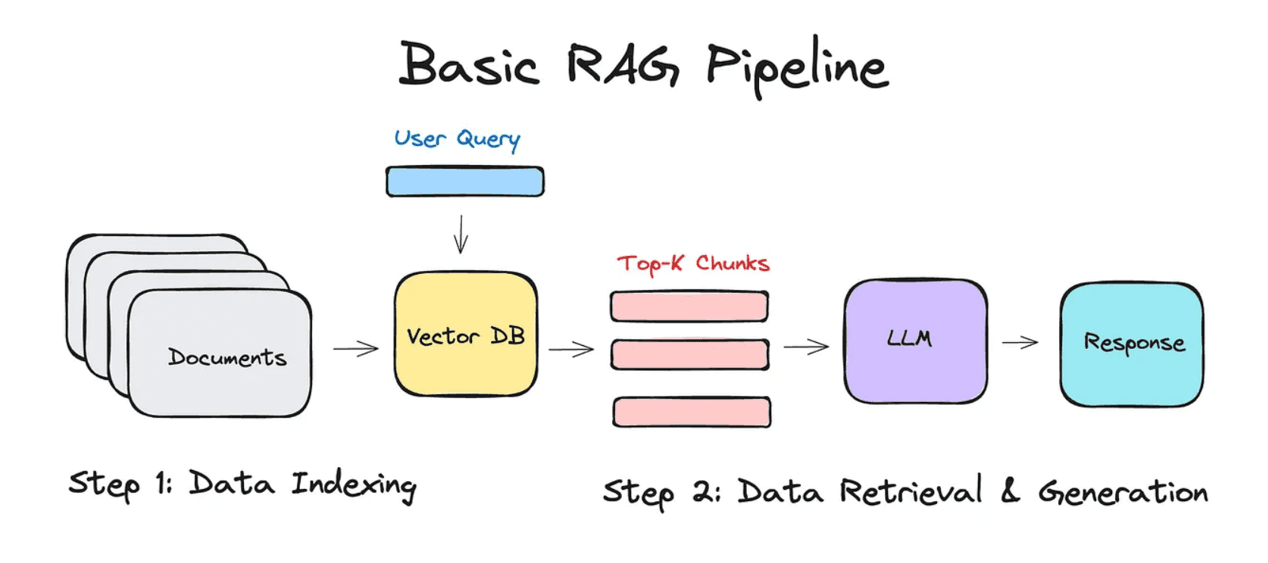

Launching AI features fast isn’t about hero hacking—it’s about having a pipeline that refuses to waste effort. Here’s how I ship new agents, RAG workflows, or inference endpoints without breaking a sweat.

1. Prototype in a Disposable Playground

I begin in a JupyterLab environment built inside a container image, with dependencies pinned and versioned by uv. Every experiment is tracked with Weights & Biases, and prompts live in a dedicated .prompt folder. The rule: if it’s not reproducible, it doesn’t ship.
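One way to make "reproducible" concrete for prompts is to fingerprint the whole prompt folder and record that fingerprint alongside each experiment run. Here is a minimal sketch under that assumption; `loadPrompts` and `promptFingerprint` are hypothetical helpers, not part of uv or Weights & Biases, and the 12-character truncation is an arbitrary choice.

```typescript
import { createHash } from "node:crypto";
import { readdirSync, readFileSync } from "node:fs";
import { join } from "node:path";

// Hypothetical helper: read every regular file in a prompt folder (e.g. ".prompt")
// into a filename -> text map.
function loadPrompts(dir: string): Record<string, string> {
  const prompts: Record<string, string> = {};
  for (const entry of readdirSync(dir, { withFileTypes: true })) {
    if (entry.isFile()) {
      prompts[entry.name] = readFileSync(join(dir, entry.name), "utf8");
    }
  }
  return prompts;
}

// Hypothetical helper: a stable SHA-256 fingerprint of the prompt set.
// Keys are sorted so the result does not depend on directory listing order.
function promptFingerprint(prompts: Record<string, string>): string {
  const hash = createHash("sha256");
  for (const name of Object.keys(prompts).sort()) {
    // NUL separators keep ("ab", "c") distinct from ("a", "bc").
    hash.update(name).update("\0").update(prompts[name]).update("\0");
  }
  return hash.digest("hex").slice(0, 12);
}
```

Usage would look like `promptFingerprint(loadPrompts(".prompt"))`, with the result stored in the experiment's config (for example, the W&B run config) so any result can be traced back to the exact prompt text that produced it.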

2. Crank Out Guardrailed APIs

Once the model feels legit, I wrap it in a Hono microservice deployed to Vercel Edge Functions. Requests are validated at the route, abuse throttling is handled by Upstash Rate Limiting, and all outputs get scored by a tiny toxicity classifier running on Modal.
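Upstash does the throttling in production, but unit tests and offline runs sometimes need a limiter without Redis. The sketch below is a hypothetical, dependency-free `SlidingWindowLimiter` (a sliding-window-log variant, not Upstash’s implementation). Its synchronous `limit(identifier)` returns an object with a `success` flag, loosely mirroring the shape of the `@upstash/ratelimit` result so the same check can sit behind either one.

```typescript
// Hypothetical in-memory limiter for local development and tests; NOT the Upstash implementation.
// Sliding-window log: allow at most `max` hits per `windowMs` for each identifier.
class SlidingWindowLimiter {
  private readonly max: number;
  private readonly windowMs: number;
  private readonly hits = new Map<string, number[]>();

  constructor(max: number, windowMs: number) {
    this.max = max;
    this.windowMs = windowMs;
  }

  limit(identifier: string, now: number = Date.now()): { success: boolean; remaining: number } {
    const windowStart = now - this.windowMs;
    // Drop timestamps that have slid out of the window.
    const recent = (this.hits.get(identifier) ?? []).filter((t) => t > windowStart);
    if (recent.length >= this.max) {
      this.hits.set(identifier, recent);
      return { success: false, remaining: 0 };
    }
    // Record this hit and report how many remain in the current window.
    recent.push(now);
    this.hits.set(identifier, recent);
    return { success: true, remaining: this.max - recent.length };
  }
}
```

State lives in one process’s memory, so separate edge isolates would each keep their own counts. That is exactly why a shared store like Upstash Redis is the right call in production. In a route, the check would run before moderation, keyed on something like the client IP, returning a 429 when `success` is false.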

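```ts
import { Hono } from "hono";
import { detectToxicity } from "@/lib/moderation";

const app = new Hono();

app.post("/infer", async (c) => {
  // Parse the body; malformed JSON becomes a 400 instead of an unhandled 500.
  const payload = await c.req.json().catch(() => null);
  if (!payload || typeof payload.input !== "string") {
    return c.json({ error: "Expected a JSON body with a string `input` field." }, 400);
  }

  // Screen the incoming text before spending inference compute.
  const result = await detectToxicity(payload.input);
  if (result.flagged) {
    return c.json({ error: "Nah." }, 400);
  }

  // call inference worker here

  return c.json({ output: "Ship it." });
});

export default app;
```

3. Observe Everything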

Logs stream into Grafana Cloud via OpenTelemetry, and I’m obsessed with synthetic tests that replay critical prompts hourly. If those replays show degradation that trips an alert, a Vercel deployment hook automatically pauses the rollout.
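The hourly replay can be sketched as a small harness that runs a list of golden prompts through the inference call, checks each output, and decides whether the rollout should pause. The case format, the `mustInclude` substring check, and the default 0.9 pass-rate threshold are all assumptions for illustration. `runSyntheticSuite` is a hypothetical name, and real scoring would likely be richer than a substring match.

```typescript
// Hypothetical shape of one replayed "golden" prompt.
interface SyntheticCase {
  name: string;
  prompt: string;
  mustInclude: string; // assumption: a substring check stands in for real scoring
}

interface SuiteReport {
  total: number;
  passed: number;
  passRate: number;
  failures: string[];
  shouldPause: boolean;
}

// Replays every case sequentially; a thrown inference error counts as a failure.
async function runSyntheticSuite(
  cases: SyntheticCase[],
  infer: (prompt: string) => Promise<string>,
  minPassRate = 0.9,
): Promise<SuiteReport> {
  const failures: string[] = [];
  for (const tc of cases) {
    try {
      const output = await infer(tc.prompt);
      if (!output.toLowerCase().includes(tc.mustInclude.toLowerCase())) {
        failures.push(tc.name);
      }
    } catch {
      failures.push(tc.name);
    }
  }
  const total = cases.length;
  const passed = total - failures.length;
  // An empty suite is treated as passing rather than dividing by zero.
  const passRate = total === 0 ? 1 : passed / total;
  return { total, passed, passRate, failures, shouldPause: passRate < minPassRate };
}
```

An hourly scheduler would call this and, when `shouldPause` is true, fire whatever pause mechanism the deployment uses. The Vercel deployment hook wiring is deliberately left out of the sketch because it depends on the project’s setup.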

4. Market Like a Scientist

Every launch ships with a changelog card, an SEO-focused blog post (like this one), and a tweetstorm.mdx snippet I can paste into X or LinkedIn. Users get the sweet features; search engines get the exact keywords.
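The tweetstorm.mdx copy is hand-written, but splitting a long changelog into numbered posts is mechanical enough to script. Here is a minimal sketch, assuming a 280-character limit measured with plain JavaScript string length. X weights URLs and some characters differently, so treat the limit as approximate. The `toThread` name and the " i/n" suffix format are hypothetical choices.

```typescript
// Hypothetical helper: greedily pack words into numbered posts of at most `limit` characters.
// Reserves room for a " 99/99" suffix, so it assumes the thread stays under 100 posts.
function toThread(text: string, limit = 280): string[] {
  const reserve = 6; // length of " 99/99"
  const budget = limit - reserve;
  const words = text.split(/\s+/).filter(Boolean);
  const chunks: string[] = [];
  let current = "";
  for (let word of words) {
    // Hard-split any single word longer than the budget (e.g. a very long URL).
    while (word.length > budget) {
      if (current) {
        chunks.push(current);
        current = "";
      }
      chunks.push(word.slice(0, budget));
      word = word.slice(budget);
    }
    const candidate = current ? `${current} ${word}` : word;
    if (candidate.length <= budget) {
      current = candidate;
    } else {
      chunks.push(current);
      current = word;
    }
  }
  if (current) chunks.push(current);
  // Append "i/n" numbering once the total count is known.
  return chunks.map((chunk, i) => `${chunk} ${i + 1}/${chunks.length}`);
}
```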

Want a copy of the pipeline template? DM me on X (@HOTHEAD01TH) or just view the live version on xaid.in.